Introduction: The Invisible Orchestra of Probabilities

Imagine an orchestra performing behind a curtain. You can’t see the musicians, but you can hear the music. The rhythm, the rise and fall of notes — they hint at what’s happening behind that veil. This is precisely what a Hidden Markov Model (HMM) does: it listens to the symphony of data to infer hidden states shaping the visible outcomes.

In the vast domain of machine learning, HMMs are the maestros that conduct patterns where uncertainty reigns. From speech recognition and stock predictions to biological sequence analysis, they interpret signals we can observe to decode what we cannot see. But how do we evaluate how likely a certain sequence of observed data is under an HMM? The answer lies in the Forward Algorithm, the mathematical baton that brings harmony to chaos.

1. The Story Behind the Curtain: Understanding HMM Evaluation

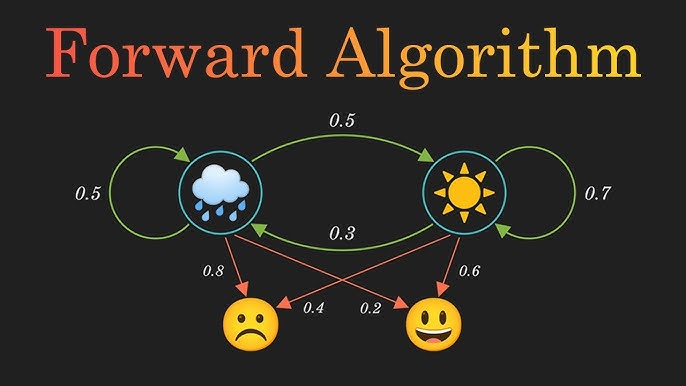

At its heart, an HMM is a probabilistic model that assumes an underlying system transitioning between hidden states, each emitting observable outputs. Think of it like a secret language where each word you hear is influenced by an unseen grammar.

When evaluating an HMM, our goal is to determine: Given a model and a sequence of observed events, how probable is it that the model could have generated this sequence? This is known as the likelihood of an observation sequence.

Instead of brute-forcing through every possible sequence of hidden states — which grows exponentially — the Forward Algorithm allows us to compute this probability efficiently. It’s like decoding a complex melody without replaying every possible arrangement of notes.
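Written out, the brute-force quantity is a sum over every possible hidden-state path. The notation below is an assumption borrowed from the usual HMM convention rather than something fixed by this article: $\lambda$ is the model, $q_1, \dots, q_T$ is one hidden path, $\pi$ holds the starting probabilities, $a_{ij}$ the transition probabilities, and $b_j(\cdot)$ the emission probabilities.

$$
P(O \mid \lambda) = \sum_{q_1, \dots, q_T} \pi_{q_1} \, b_{q_1}(O_1) \prod_{t=2}^{T} a_{q_{t-1} q_t} \, b_{q_t}(O_t)
$$

With $N$ states and $T$ observations the sum has $N^T$ terms, which is the exponential growth the Forward Algorithm is designed to avoid.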

For learners exploring statistical modeling in AI, mastering this algorithm is a defining skill. Many professionals discover such techniques during a structured data science course in Mumbai, where HMMs are explored not just theoretically, but through real-world case studies in voice and text analytics.

2. The Forward Algorithm: A Step-by-Step Journey

Let’s unfold the Forward Algorithm as a narrative of progression — a traveler moving through time, step by step, calculating the chances of their path being true.

The process involves three key elements:

- Initialization:

We begin by determining the probability of starting in each hidden state and producing the first observed symbol. It’s like setting our compass before the first move.

$$
\alpha_1(i) = \pi_i \cdot b_i(O_1)
$$

Here, $\pi_i$ is the probability of starting in state $i$, and $b_i(O_1)$ is the probability of observing the first symbol from that state.

- Recursion:

As we progress through each time step $t = 2, \dots, T$, we accumulate the probabilities from previous states transitioning into the current one and emitting the observed output.

$$
\alpha_t(j) = \sum_{i=1}^{N} \alpha_{t-1}(i) \cdot a_{ij} \cdot b_j(O_t)
$$

Here, $a_{ij}$ is the probability of moving from state $i$ to state $j$, and $b_j(O_t)$ is the probability that state $j$ emits the symbol observed at time $t$. It’s like weaving threads from past notes to current tones, ensuring continuity in melody.

- Termination:

Finally, we sum the probabilities across all possible ending states to get the overall likelihood:

$$
P(O \mid \lambda) = \sum_{i=1}^{N} \alpha_T(i)
$$

The result tells us how “believable” the observed sequence is, given our model.

This recursive elegance of the Forward Algorithm is what makes it an essential concept in probabilistic modeling and machine learning pipelines.
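The initialization, recursion, and termination steps can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the function name `forward_likelihood`, the list-based layout of `pi`, `A`, and `B`, and the toy weather model are all assumptions made for the example. A real implementation would normally rescale the values or work in log space to avoid underflow on long sequences.

```python
def forward_likelihood(pi, A, B, observations):
    """Return P(O | lambda) for a discrete HMM via the forward algorithm.

    pi[i]   : probability of starting in state i
    A[i][j] : probability of moving from state i to state j
    B[i][k] : probability that state i emits symbol k
    """
    n_states = len(pi)

    # Initialization: alpha_1(i) = pi_i * b_i(O_1)
    alpha = [pi[i] * B[i][observations[0]] for i in range(n_states)]

    # Recursion: alpha_t(j) = (sum_i alpha_{t-1}(i) * a_ij) * b_j(O_t)
    for obs in observations[1:]:
        alpha = [
            sum(alpha[i] * A[i][j] for i in range(n_states)) * B[j][obs]
            for j in range(n_states)
        ]

    # Termination: P(O | lambda) = sum_i alpha_T(i)
    return sum(alpha)


# Hypothetical two-state model (0 = "rainy", 1 = "sunny") with three
# observable symbols (0 = "walk", 1 = "shop", 2 = "clean").
pi = [0.6, 0.4]
A = [[0.7, 0.3],
     [0.4, 0.6]]
B = [[0.1, 0.4, 0.5],
     [0.6, 0.3, 0.1]]

likelihood = forward_likelihood(pi, A, B, [0, 1, 2])
print(f"P(O | model) = {likelihood:.6f}")  # P(O | model) = 0.033612
```

Only the most recent column of forward values is kept in `alpha`, since each step depends on nothing older than the previous one.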

3. The Power of Efficiency: Why the Forward Algorithm Matters

Without the Forward Algorithm, evaluating an HMM would be computationally impractical. If there are N states and T observations, the naive approach must enumerate $N^T$ hidden-state paths and multiply roughly $2T$ probabilities along each one, a combinatorial explosion. The Forward Algorithm reduces this to on the order of $N^2 T$ operations, transforming impossibility into elegance.
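To make the gap concrete, the sketch below computes the same likelihood twice for a small model: once by enumerating every hidden path, and once with the forward recursion. The model parameters and the function names are hypothetical and chosen only for this comparison. It is a check under those assumptions, not a benchmark.

```python
from itertools import product


def brute_force_likelihood(pi, A, B, observations):
    """Sum the joint probability over every possible hidden-state path."""
    n_states = len(pi)
    total = 0.0
    paths = 0
    for path in product(range(n_states), repeat=len(observations)):
        p = pi[path[0]] * B[path[0]][observations[0]]
        for t in range(1, len(observations)):
            p *= A[path[t - 1]][path[t]] * B[path[t]][observations[t]]
        total += p
        paths += 1
    return total, paths


def forward_likelihood(pi, A, B, observations):
    """Same quantity via the forward recursion."""
    n_states = len(pi)
    alpha = [pi[i] * B[i][observations[0]] for i in range(n_states)]
    for obs in observations[1:]:
        alpha = [
            sum(alpha[i] * A[i][j] for i in range(n_states)) * B[j][obs]
            for j in range(n_states)
        ]
    return sum(alpha)


# Hypothetical two-state, two-symbol model used only for this comparison.
pi = [0.5, 0.5]
A = [[0.9, 0.1], [0.2, 0.8]]
B = [[0.8, 0.2], [0.3, 0.7]]
observations = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]  # T = 10

brute, n_paths = brute_force_likelihood(pi, A, B, observations)
fwd = forward_likelihood(pi, A, B, observations)

print(n_paths)                   # 2**10 = 1024 paths enumerated
print(abs(brute - fwd) < 1e-12)  # both methods agree up to rounding
```

Even with two states and ten observations the enumeration visits 1024 paths, while the recursion needs only $N^2 T = 40$ multiply-and-add steps. Each extra observation doubles the first figure and adds just four steps to the second.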

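This efficiency is what allows applications like speech recognition, part-of-speech tagging, and bioinformatics to score long sequences quickly enough for interactive use. Imagine a voice assistant analyzing your speech: each short slice of audio becomes an observation, and the Forward Algorithm scores how well a candidate model explains what was heard. Recovering the single most likely sequence of phonemes is a closely related task, usually handled by the Viterbi algorithm, which follows the same recursion with a maximum in place of the sum.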

For learners diving into applied AI, a structured data scientist course helps demystify such algorithms, bridging mathematical theory with hands-on implementation using Python, TensorFlow, or R.

4. Real-World Analogies: Where We Encounter HMMs Daily

The magic of HMMs is that they quietly power many tools we use every day.

- Speech Recognition Systems: Translate sound waves (observations) into words (hidden states).

- Financial Forecasting: Infer market regimes (hidden states) behind observed price fluctuations (observations).

- Natural Language Processing: Tag parts of speech (hidden states) based on the words observed and the transitions between tags.

Even the suggestions that appear as you type a search query follow the same spirit, whether or not an HMM is the model underneath: they turn uncertainty about what comes next into a prediction based on what has been observed so far.

Aspiring analysts can experience such algorithms in action through projects offered in a data science course in Mumbai, where classroom learning merges with live datasets and business problems.

Conclusion: The Art of Seeing the Unseen

The Forward Algorithm is more than a mathematical routine — it’s a way of thinking about uncertainty. It reminds us that every visible event may have an invisible cause, and through structured probability, we can estimate the unseen.

In the symphony of data, Hidden Markov Models serve as the quiet conductors, and the Forward Algorithm as their guiding baton. Together, they teach us that true intelligence lies not in seeing everything, but in inferring wisely from what we can observe.

Those who master such concepts through a data scientist course don’t just learn to code models — they learn to read the language of hidden probabilities that govern our digital world.

Business name: ExcelR- Data Science, Data Analytics, Business Analytics Course Training Mumbai
